
University of Kerala

High Performance Computing

High Performance Computing most generally refers to the practice of aggregating computing power in a way that delivers much higher performance than one could get out of a typical desktop computer or workstation in order to solve large problems in science, engineering, or business.

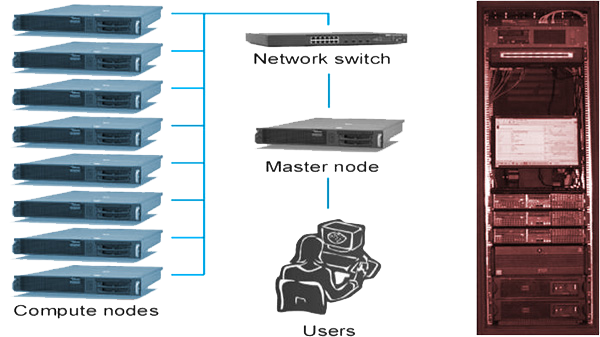

Architecture

The cluster runs Rocks 6.1 on 64-bit CentOS 6.3. It is an implementation of a "Beowulf" cluster and uses the Sun Grid Engine (SGE) scheduler for job submission. It has 10 nodes: a master node with 24 GB of RAM and nine compute nodes with 8 GB of RAM each. Each node is a rack server with two six-core Intel® Xeon® E5645 processors running at 2.40 GHz.
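
The node counts above determine how much capacity is available to jobs. The short calculation below works this out. It is only a sketch: it assumes all ten nodes have identical processors, and it counts physical cores, not hardware threads.

```python
# Node inventory as described above: one master node plus nine compute nodes,
# each with two six-core Intel Xeon E5645 processors.
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 6

nodes = {
    # role: (number of nodes, RAM per node in GB)
    "master": (1, 24),
    "compute": (9, 8),
}


def totals(inventory):
    """Return (node count, physical cores, total RAM in GB) for an inventory."""
    node_count = sum(count for count, _ in inventory.values())
    cores = node_count * SOCKETS_PER_NODE * CORES_PER_SOCKET
    ram_gb = sum(count * ram for count, ram in inventory.values())
    return node_count, cores, ram_gb


print("whole cluster:", totals(nodes))                          # (10, 120, 96)
print("compute nodes:", totals({"compute": nodes["compute"]}))  # (9, 108, 72)
```

Each compute node therefore offers 12 cores sharing 8 GB. That is roughly 0.67 GB per core, which is worth keeping in mind when sizing memory-hungry jobs.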

The cluster has a front-end (master) node and compute nodes. The front-end node is where users log in, submit jobs, compile code, and so on. It can also act as a router for the other cluster nodes by using network address translation (NAT). The compute nodes are the workhorses of the cluster. The Rocks management scheme allows the complete operating system to be reinstalled on every compute node in a short time (about 10 minutes). The compute nodes are not visible on the public Internet.

Users submit computational work from the login (master) node to the compute nodes through a batch system. The cluster is accessed remotely via SSH. Users authenticate (log in) with an SSH client, and after successful authentication they are given a command-line interface. From there they can submit computational jobs to the batch system queues.

The cluster uses a 10-gigabit Ethernet network for MPI traffic, giving a nominal bandwidth of 10 Gbit/s (about 1.25 GB/s) per link for message passing between nodes. It also uses shared data storage. This significantly reduces the management overhead of the cluster, because data does not need to be copied to every node before jobs can run.
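
The arithmetic behind "10 Gbit/s is about 1.25 GB/s" is shown below, along with a lower bound on transfer time. This is a sketch that uses the nominal line rate only. Real MPI transfers will be slower because of latency and protocol overhead.

```python
LINK_GBIT_PER_S = 10  # nominal 10-gigabit Ethernet line rate


def transfer_seconds(num_bytes, link_gbit_per_s=LINK_GBIT_PER_S):
    """Lower bound on the time to move num_bytes at the nominal line rate.

    Ignores latency and protocol overhead, so measured times will be higher.
    """
    bits = num_bytes * 8
    return bits / (link_gbit_per_s * 1e9)


# Peak payload rate in gigabytes per second: 10 Gbit/s divided by 8 bits per byte.
peak_gb_per_s = LINK_GBIT_PER_S / 8
print(peak_gb_per_s)                   # 1.25
print(transfer_seconds(1_000_000_000))  # 0.8 (1 GB needs at least 0.8 s)
```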

The cluster can also be viewed through a web page, which shows the configuration of the nodes and their current status.

Protecting Data and Information

The software installed on the HPC cluster is designed to help multiple users efficiently share a large pool of compute nodes, ensuring that resources are fairly available to everyone within configured limits. Security between users and groups is strictly maintained, providing you with mechanisms to control which data is shared with collaborators and which is kept private.

When using the HPC cluster, you are responsible for adhering to your site's information security policy. The policy outlines acceptable behaviour and provides guidelines designed to allow maximum flexibility for users while maintaining a good level of service for all. In addition, users are encouraged to:

- Change your system password regularly

- Only access machines that you have a valid requirement to use

- Adhere to your site's information security policy

- Make backup copies of important or valuable data

- Remove temporary data after use
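
Removing temporary data can be partly automated. The sketch below finds, and optionally deletes, files that have not been modified for a given number of days. The scratch directory path and the 30-day threshold in the usage comments are hypothetical, not site policy. The function defaults to a dry run so you can review the list before anything is deleted.

```python
import os
import time


def find_stale_files(root, max_age_days):
    """Return paths under root whose last modification is older than max_age_days."""
    cutoff = time.time() - max_age_days * 86400  # 86400 seconds per day
    stale = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # lstat: judge a symlink by the link itself, never follow it
            if os.lstat(path).st_mtime < cutoff:
                stale.append(path)
    return sorted(stale)


def remove_stale_files(root, max_age_days, dry_run=True):
    """List stale files and, unless dry_run is True, delete them."""
    stale = find_stale_files(root, max_age_days)
    if not dry_run:
        for path in stale:
            os.remove(path)
    return stale


# Hypothetical scratch directory: preview first, then delete.
# remove_stale_files(os.path.expanduser("~/scratch"), 30)
# remove_stale_files(os.path.expanduser("~/scratch"), 30, dry_run=False)
```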

Authentication and Security

You will be given a username and password that allow you to log in to the cluster and run computational jobs. For security reasons, you should log in and change your password as soon as you receive your credentials.

Secure Shell (SSH) is used to connect to remote computers, i.e., to authenticate (login) and interact with the remote system.

File Transfer

You will probably want to upload files to the HPC system or download them to your desktop or laptop. Linux users can do this with OpenSSH utilities such as scp and sftp. Windows users can use a graphical client such as WinSCP.
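
The OpenSSH scp command takes a source and a destination, and remote locations are written as user@host:path. The sketch below builds such command lines for an upload and a download. The user name and front-end host name are hypothetical placeholders; substitute the credentials and address you were given.

```python
import shlex


def scp_args(source, destination, recursive=False):
    """Return an argv list for OpenSSH scp; remote paths use user@host:path."""
    args = ["scp"]
    if recursive:
        args.append("-r")  # copy whole directories
    args += [source, destination]
    return args


# Hypothetical user name and front-end host name.
REMOTE = "alice@hpc.example.edu"

# Remote paths without a leading "/" are relative to your home directory.
upload = scp_args("input.dat", f"{REMOTE}:project/")
download = scp_args(f"{REMOTE}:project/results", ".", recursive=True)
print(shlex.join(upload))    # scp input.dat alice@hpc.example.edu:project/
print(shlex.join(download))  # scp -r alice@hpc.example.edu:project/results .

# To perform a transfer from Python, pass the list to subprocess.run(..., check=True).
```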

Running Computational Jobs

The HPC system is a shared computational resource. To ensure everyone gets a fair share and to allow the system to function correctly:

- All computationally intensive work must be submitted to the batch system's queues. Computationally intensive processes run on the login node will be killed without warning. (Low-intensity work, such as editing files, is of course perfectly acceptable on the login node.)

- As a consequence, most computational work must be carried out in batch mode, i.e., non-interactively. For example, when using MATLAB, the computation must be done by asking MATLAB to run a MATLAB program, rather than by starting its interactive interface (e.g., the GUI).

- The HPC system uses SGE (Sun Grid Engine) as its batch system.
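
An SGE batch job is a shell script whose `#$` lines carry qsub options. The sketch below renders such a script for the MATLAB case: MATLAB runs with no desktop or splash screen and exits when a script finishes. The job name, the one-hour wall-clock limit, and the script name `myanalysis.m` are hypothetical. Whether `h_rt` limits are enforced depends on the site configuration.

```python
def sge_job_script(name, command, runtime="01:00:00", shell="/bin/bash"):
    """Render a minimal SGE batch script as a string.

    Each '#$' line is an embedded qsub option:
      -N job name, -S interpreter, -cwd run in the submission directory,
      -j y merge stderr into stdout, -l h_rt hard wall-clock limit.
    """
    lines = [
        f"#!{shell}",
        f"#$ -N {name}",
        f"#$ -S {shell}",
        "#$ -cwd",
        "#$ -j y",
        f"#$ -l h_rt={runtime}",
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


# Run the (hypothetical) MATLAB script myanalysis.m non-interactively,
# then exit MATLAB so the job finishes.
script = sge_job_script(
    "matlab_run",
    'matlab -nodisplay -nosplash -r "myanalysis; exit"',
)
print(script)
```

Save the printed text as, for example, `matlab_run.sh` and submit it from the login node with `qsub matlab_run.sh`.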

Users can run several types of job through the cluster scheduler, including batch jobs, array jobs, SMP jobs, parallel jobs and interactive jobs.
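
In SGE these job types differ mainly in the submission command and options. An array job uses `qsub -t`, and each task reads its index from `$SGE_TASK_ID`. SMP and parallel (MPI) jobs request slots from a parallel environment with `qsub -pe`. An interactive session is started with `qrsh`. The sketch below maps each type to a command line. The parallel-environment names `smp` and `mpi` are hypothetical, because PE names are defined by the administrator; `qconf -spl` lists the real ones.

```python
# Hypothetical parallel-environment names; list the real ones with `qconf -spl`.
SMP_PE = "smp"
MPI_PE = "mpi"


def qsub_args(job_type, script=None, slots=1, tasks=None):
    """Return an argv list that submits `script` as the given SGE job type."""
    if job_type == "interactive":
        # Interactive shell on a compute node; no job script is involved.
        return ["qrsh"]
    if script is None:
        raise ValueError("a job script is required for non-interactive jobs")
    args = ["qsub"]
    if job_type == "array":
        first, last = tasks
        # One task per index; each task reads its index from $SGE_TASK_ID.
        args += ["-t", f"{first}-{last}"]
    elif job_type == "smp":
        # Shared-memory job: keeping all slots on one node depends on the
        # PE's allocation rule (e.g. $pe_slots).
        args += ["-pe", SMP_PE, str(slots)]
    elif job_type == "parallel":
        # MPI job: slots may span nodes; pass $NSLOTS to mpirun in the script.
        args += ["-pe", MPI_PE, str(slots)]
    elif job_type != "batch":
        raise ValueError(f"unknown job type: {job_type}")
    return args + [script]


for kind, kwargs in [
    ("batch", {}),
    ("array", {"tasks": (1, 10)}),
    ("smp", {"slots": 12}),
    ("parallel", {"slots": 24}),
]:
    print(" ".join(qsub_args(kind, "job.sh", **kwargs)))
print(" ".join(qsub_args("interactive")))
```

With 12 cores per node, an SMP job can use at most 12 slots. The 24-slot parallel example would need at least two nodes.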

Monitoring and Managing Jobs

When submitting jobs to the batch system, one may wish to know: Are my jobs running? What other jobs are running? Which queues are busy? When will my job run?
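
In SGE these questions are answered with `qstat`. Plain `qstat` lists your own jobs, and `qstat -u '*'` lists every user's jobs. `qstat -g c` summarises load per cluster queue, and `qstat -j <job-ID>` shows details of a single job. The sketch below parses the default `qstat` table into running (`r`) and queued-waiting (`qw`) jobs. The sample output is made up for illustration, and the parser assumes job names contain no spaces.

```python
# Made-up sample of default qstat output (header, dashed rule, one line per job).
SAMPLE_QSTAT = """\
job-ID  prior   name       user         state submit/start at     queue                          slots ja-task-ID
-----------------------------------------------------------------------------------------------------------------
    101 0.55500 run1       alice        r     03/12/2013 10:02:11 all.q@compute-0-1.local           12
    102 0.00000 run2       alice        qw    03/12/2013 10:05:40                                   24
"""


def parse_qstat(text):
    """Return a list of dicts (job_id, name, user, state) from plain qstat output."""
    jobs = []
    for line in text.splitlines()[2:]:  # skip the header and the dashed rule
        fields = line.split()
        if len(fields) < 5:
            continue  # blank or unexpected line
        jobs.append({"job_id": int(fields[0]), "name": fields[2],
                     "user": fields[3], "state": fields[4]})
    return jobs


# On the cluster the text would come from
# subprocess.run(["qstat"], capture_output=True, text=True).stdout
jobs = parse_qstat(SAMPLE_QSTAT)
running = [j["job_id"] for j in jobs if "r" in j["state"]]
pending = [j["job_id"] for j in jobs if "qw" in j["state"]]
print(running, pending)  # [101] [102]
```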

You may also need to remove a waiting job from the queue or stop a running job. Both can be done through the batch system.
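
In SGE both actions use `qdel`. Given one or more job IDs, it removes pending jobs and stops running ones, and `qdel -u <user>` removes all of a user's jobs. The sketch below only builds the command line. The job ID and the user name `alice` are hypothetical.

```python
def qdel_args(job_ids=None, user=None):
    """Build a qdel argv list: specific job IDs, or every job of one user."""
    if user is not None:
        return ["qdel", "-u", user]
    if not job_ids:
        raise ValueError("give at least one job ID or a user name")
    return ["qdel"] + [str(j) for j in job_ids]


print(qdel_args([102]))         # ['qdel', '102']
print(qdel_args(user="alice"))  # ['qdel', '-u', 'alice']
# On the login node, run the list with subprocess.run(args, check=True).
```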